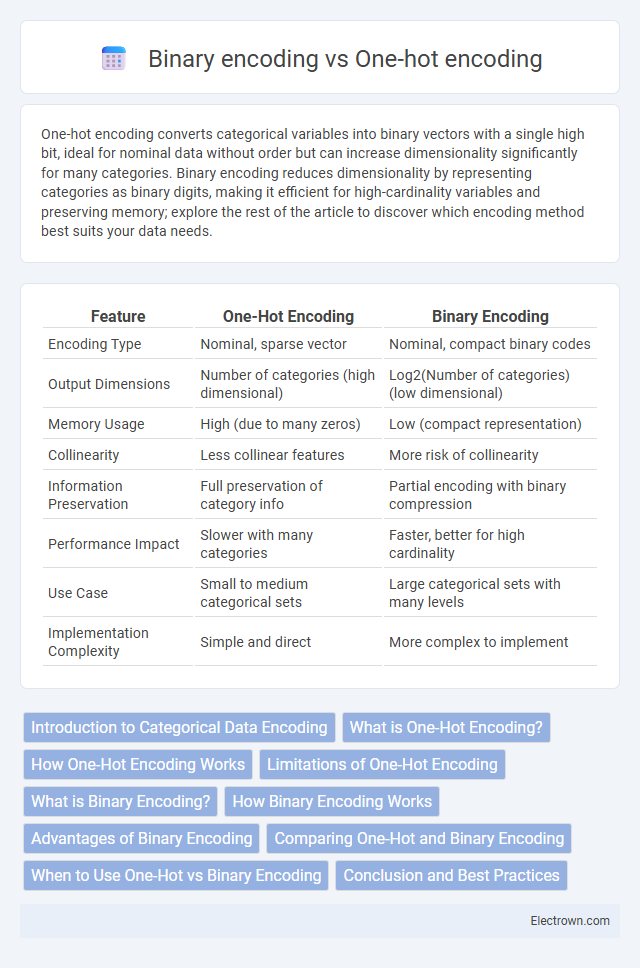

One-hot encoding converts categorical variables into binary vectors with a single high bit, ideal for nominal data without order but can increase dimensionality significantly for many categories. Binary encoding reduces dimensionality by representing categories as binary digits, making it efficient for high-cardinality variables and preserving memory; explore the rest of the article to discover which encoding method best suits your data needs.

Table of Comparison

| Feature | One-Hot Encoding | Binary Encoding |

|---|---|---|

| Encoding Type | Nominal, sparse vector | Nominal, compact binary codes |

| Output Dimensions | Number of categories (high dimensional) | Log2(Number of categories) (low dimensional) |

| Memory Usage | High (due to many zeros) | Low (compact representation) |

| Collinearity | Less collinear features | More risk of collinearity |

| Information Preservation | Full preservation of category info | Partial encoding with binary compression |

| Performance Impact | Slower with many categories | Faster, better for high cardinality |

| Use Case | Small to medium categorical sets | Large categorical sets with many levels |

| Implementation Complexity | Simple and direct | More complex to implement |

Introduction to Categorical Data Encoding

Categorical data encoding transforms non-numeric categories into numerical formats suitable for machine learning algorithms. One-hot encoding creates binary columns for each category, ensuring no ordinal relationship but increasing dimensionality with many unique values. Binary encoding compacts categories into fewer columns using binary code representation, reducing dimensionality while maintaining distinguishability, which can be advantageous for your datasets with high-cardinality categorical features.

What is One-Hot Encoding?

One-hot encoding transforms categorical variables into binary vectors, where each category is represented by a vector with a single high (1) value and zeros elsewhere. This method preserves the uniqueness of each category without implying ordinal relationships, making it ideal for algorithms requiring independent feature inputs. Your data preprocessing can benefit from one-hot encoding by effectively converting nominal categories into a machine-readable format without introducing bias.

How One-Hot Encoding Works

One-hot encoding transforms categorical variables into binary vectors where each category is represented by a unique vector with one element set to 1 and all others set to 0. This process creates a sparse matrix, preserving the original categorical information without implying any ordinal relationship. It is especially useful for algorithms that require numerical input while avoiding the pitfalls of arbitrary numeric assignments.

Limitations of One-Hot Encoding

One-hot encoding creates high-dimensional sparse vectors, which can lead to increased memory usage and computational inefficiency, especially with features that have many categories. It also ignores any ordinal relationships or similarities between categories, potentially reducing model performance on certain tasks. Your choice of encoding method should consider dataset size and category cardinality to avoid these limitations.

What is Binary Encoding?

Binary encoding is a technique for converting categorical data into a compact numerical format by representing each category as a binary number, reducing dimensionality compared to one-hot encoding. Unlike one-hot encoding, which creates a separate binary variable for each category, binary encoding uses fewer columns by encoding category indices into binary code. This method is especially efficient for datasets with high cardinality categorical features, optimizing memory usage and computational speed while preserving category distinctiveness.

How Binary Encoding Works

Binary encoding transforms categorical variables by converting each category into a binary representation, significantly reducing dimensionality compared to one-hot encoding. Instead of creating a separate column for every category, binary encoding assigns unique binary codes to categories, which are then split into multiple columns representing bits. This method preserves essential category information while optimizing memory and computational efficiency, making it ideal for datasets with high cardinality.

Advantages of Binary Encoding

Binary encoding reduces dimensionality by converting categorical variables into fewer binary digits compared to one-hot encoding, which creates a separate column for each category. This compression decreases memory usage and computational complexity, making binary encoding more efficient for high-cardinality datasets. It also helps mitigate multicollinearity issues common in one-hot encoded features by generating fewer correlated variables.

Comparing One-Hot and Binary Encoding

One-hot encoding represents categorical variables as binary vectors with a single high bit, making it ideal for nominal data with low cardinality but often resulting in high dimensionality. Binary encoding reduces dimensionality by converting categories into binary numbers, which suits datasets with high cardinality and improves computational efficiency. Choosing between one-hot and binary encoding depends on your dataset size and computational resources, balancing interpretability and performance.

When to Use One-Hot vs Binary Encoding

One-hot encoding is ideal for categorical variables with a relatively small number of unique categories, as it creates a sparse matrix where each category is represented by a distinct binary vector, making interpretation straightforward and suitable for algorithms like logistic regression and neural networks. Binary encoding is more efficient for high-cardinality categorical data, reducing dimensionality by converting categories into binary digits, which minimizes memory usage and often improves model performance with algorithms sensitive to high-dimensional data, such as tree-based models. Your choice depends on the dataset's cardinality and the model's sensitivity to feature sparsity and dimensionality.

Conclusion and Best Practices

One-hot encoding is ideal for categorical variables with a small number of unique categories, providing clear interpretable binary vectors without imposing ordinal relationships. Binary encoding reduces dimensionality and memory usage in datasets with high-cardinality categorical features by representing categories in binary format, improving performance in tree-based models. Best practices recommend choosing one-hot encoding for low-cardinality features and binary encoding for high-cardinality features to balance model interpretability and computational efficiency.

One-hot encoding vs binary encoding Infographic

electrown.com

electrown.com