Propagation delay (t_pd) is the maximum time from an input transition until a circuit's output reaches its final, stable value, while contamination delay (t_cd) is the minimum time from an input transition until the output begins to change. Understanding both delays helps you optimize digital circuit performance, so continue reading to explore their impact in detail.

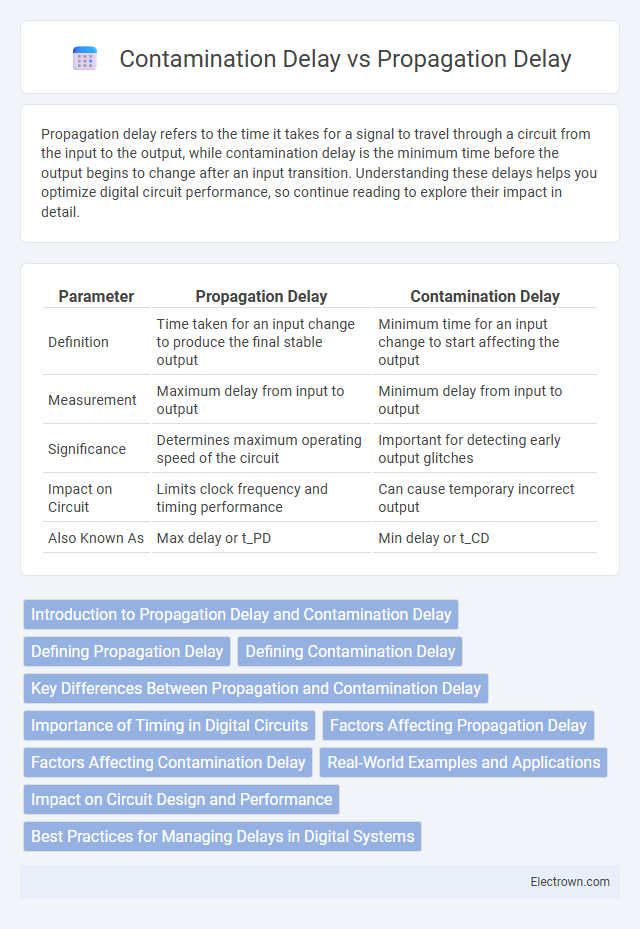

Table of Comparison

| Parameter | Propagation Delay | Contamination Delay |
|---|---|---|
| Definition | Maximum time from an input change until the output reaches its final, stable value | Minimum time from an input change until the output starts to change |
| Measurement | Maximum (worst-case, slowest-path) delay from input to output | Minimum (best-case, fastest-path) delay from input to output |
| Significance | Determines the maximum operating speed (clock frequency) of the circuit | Determines whether hold-time requirements are met; marks the earliest possible glitch |
| Impact on Circuit | Limits clock frequency; too long a delay causes setup-time violations | The output may become invalid this early; too short a delay causes hold-time violations |
| Also Known As | Max delay or t_PD | Min delay or t_CD |

Introduction to Propagation Delay and Contamination Delay

Propagation delay is the maximum time a digital signal needs to travel from the input to the output of a circuit and settle, so it sets the circuit's overall timing performance. Contamination delay is the minimum time before a change in input can start to affect the output; in synchronous systems it is critical for hold-time analysis and marks the earliest point at which glitches can appear. Both delays play essential roles in timing analysis: propagation delay is checked against setup-time constraints and contamination delay against hold-time constraints.

Defining Propagation Delay

Propagation delay is the maximum time from an input change at a logic gate (or a larger combinational circuit) until its output reaches its final, stable value. This delay limits the speed and timing performance of digital circuits, directly constraining the achievable clock frequency. Unlike contamination delay, which measures the minimum time before an output may start to change, propagation delay is the worst-case duration for the output to stabilize after an input transition.
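
As a worked illustration, assuming a purely combinational path of N gates with wire delay neglected, the propagation delay of one path is the sum of its gate propagation delays, and the circuit's propagation delay is the largest such sum over all input-to-output paths (the critical path):

$$
t_{pd}^{\text{path}} = \sum_{i=1}^{N} t_{pd,i},
\qquad
t_{pd}^{\text{circuit}} = \max_{\text{paths}} \, t_{pd}^{\text{path}}
$$

For example, a critical path through three gates with hypothetical delays of 30 ps, 40 ps, and 25 ps has t_pd = 30 + 40 + 25 = 95 ps.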

Defining Contamination Delay

Contamination delay is the minimum time from an input change until the output starts to change, marking the earliest moment the output can become invalid. It contrasts with propagation delay, which measures the maximum time for the output to settle to its final stable state after an input transition. Understanding contamination delay is crucial for designing reliable digital circuits: it tells you how soon glitches can appear and whether a downstream register's hold-time requirement is met.
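
To make the max/min distinction concrete, here is a minimal sketch that computes both delays for a small combinational netlist: t_pd follows the longest path into each node and t_cd follows the shortest. The netlist, the gate names (`n1`, `n2`, `n3`, `y`), the delay values, and the helper `path_delays` are all hypothetical, and each gate is assumed to have a single t_pd/t_cd regardless of which input switches.

```python
# Hypothetical netlist, for illustration only: gate -> (t_pd, t_cd, fan-in nodes).
# Delays are in picoseconds; "a", "b", "c" are primary inputs that change at t = 0.
GATES = {
    "n1": (30, 20, ["a", "b"]),
    "n2": (40, 25, ["b", "c"]),
    "n3": (35, 15, ["n1", "n2"]),
    "y": (25, 10, ["n3", "c"]),
}


def path_delays(gates):
    """Return {node: (t_pd, t_cd)} measured from the primary inputs.

    t_pd follows the longest (slowest) path into each node,
    t_cd follows the shortest (fastest) path.
    """
    memo = {}

    def visit(node):
        if node not in gates:  # primary input: no gate delay
            return (0, 0)
        if node not in memo:
            t_pd, t_cd, fanin = gates[node]
            latest = max(visit(f)[0] for f in fanin)    # slowest arriving input
            earliest = min(visit(f)[1] for f in fanin)  # fastest arriving input
            memo[node] = (latest + t_pd, earliest + t_cd)
        return memo[node]

    for node in gates:
        visit(node)
    return memo


print(path_delays(GATES)["y"])  # (100, 10)
```

Output `y` is guaranteed stable only after 100 ps (b through n2, n3, and y), yet it may start changing after just 10 ps (c through gate y alone). The same circuit therefore has a large propagation delay and a very small contamination delay.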

Key Differences Between Propagation and Contamination Delay

Propagation delay measures the maximum time a signal takes to travel from the input to the output of a digital circuit and settle, which determines the maximum speed at which the circuit can operate. Contamination delay is the minimum time after an input change before the output starts to change, which bounds how early glitches can appear and whether hold-time requirements are met. Propagation delay follows the slowest path and feeds the setup-time check; contamination delay follows the fastest path and feeds the hold-time check. Understanding this distinction is crucial for designing reliable synchronous circuits.
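
The two checks can be sketched for a single register-to-logic-to-register path as follows. This assumes the common textbook formulation with clock skew ignored; the parameter names (`t_pcq` and `t_ccq` for the launching flip-flop's clock-to-Q propagation and contamination delays, `t_setup`, `t_hold`) and all numeric values are illustrative assumptions, not figures from a real library.

```python
def timing_slacks(t_clk, t_pcq, t_ccq, t_setup, t_hold, t_pd, t_cd):
    """Return (setup_slack, hold_slack) for a register -> logic -> register path.

    Clock skew is ignored (assumption). Negative slack means a violation.
      setup: t_clk >= t_pcq + t_pd + t_setup   (slowest path: propagation delay)
      hold:  t_ccq + t_cd >= t_hold            (fastest path: contamination delay)
    """
    setup_slack = t_clk - (t_pcq + t_pd + t_setup)
    hold_slack = (t_ccq + t_cd) - t_hold
    return setup_slack, hold_slack


# Hypothetical values in ps: a 500 ps clock and a path with t_pd = 100, t_cd = 10.
print(timing_slacks(t_clk=500, t_pcq=50, t_ccq=30, t_setup=60, t_hold=70,
                    t_pd=100, t_cd=10))  # (290, -30)
```

The example passes setup with 290 ps to spare but fails hold by 30 ps. Note that `t_clk` does not appear in the hold check, so slowing the clock cannot fix a hold violation; only a longer contamination delay can.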

Importance of Timing in Digital Circuits

Propagation delay is the worst-case time for a signal to propagate through the combinational logic between registers, so it sets the minimum clock period and therefore the maximum operating frequency. Contamination delay is the minimum time after an input change before the output may begin to change; it is critical for avoiding race conditions (hold-time violations) and glitches in sequential circuits. Accurate management of both delays is essential for reliable timing analysis and optimal performance in high-speed digital designs.
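
A minimal sketch of how propagation delay caps the clock: the slowest register-to-register path sets the minimum period from the setup constraint, and the maximum frequency is its reciprocal. The path names, delays, and the helper `max_clock_frequency` are hypothetical, and clock skew is ignored.

```python
def max_clock_frequency(paths_ps, t_pcq, t_setup):
    """Return (critical path, minimum clock period in ps, f_max in GHz).

    paths_ps maps each register-to-register path name to its propagation
    delay in ps. Clock skew is ignored (assumption).
    """
    critical = max(paths_ps, key=paths_ps.get)       # slowest path limits the clock
    t_c_min = t_pcq + paths_ps[critical] + t_setup   # setup constraint at equality
    return critical, t_c_min, 1000.0 / t_c_min       # 1000 ps per ns -> GHz


# Hypothetical path delays (ps), for illustration only.
paths = {"alu": 320, "decode": 210, "regfile": 150}
print(max_clock_frequency(paths, t_pcq=50, t_setup=30))  # ('alu', 400, 2.5)
```

Only the critical path matters here: speeding up `decode` or `regfile` would not raise f_max, which is why timing optimization concentrates on the longest paths.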

Factors Affecting Propagation Delay

Propagation delay is influenced by factors such as load capacitance, transistor switching speed (drive strength), and interconnect resistance, which together determine how quickly a gate can charge or discharge its output node and therefore how long a signal takes to pass through a gate or circuit. Because propagation delay is a worst-case figure, it is set by the slowest path and depends on the physical and electrical properties of the devices and their operating environment. Understanding these factors helps you optimize circuit performance by minimizing delays and ensuring reliable timing in digital designs.
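
One way to see how load capacitance and interconnect resistance add up is the Elmore delay, a standard first-order estimate in which each capacitance is weighted by the total resistance between it and the driver. The sketch below assumes a lumped model (each wire segment is a series R followed by a shunt C) and uses a hypothetical helper `elmore_delay_ps` with illustrative values; for a single RC stage, the 50% delay is about 0.69 times this time constant.

```python
def elmore_delay_ps(r_driver, segments, c_load):
    """First-order Elmore delay of a driver, an RC wire, and a load capacitance.

    r_driver: driver output resistance (ohms)
    segments: wire pieces from driver to load as (R in ohms, C in fF), each
              modeled as a series R followed by a shunt C (lumped L-section)
    c_load:   input capacitance of the driven gate(s) (fF)
    ohm * fF = 1e-15 s, so dividing by 1000 converts the result to ps.
    """
    tau = 0.0
    r_upstream = r_driver
    for r_seg, c_seg in segments:
        r_upstream += r_seg           # resistance between driver and this node
        tau += r_upstream * c_seg     # this node's capacitance sees all of it
    tau += r_upstream * c_load        # the load sits at the far end of the wire
    return tau / 1000.0


wire = [(100, 10), (100, 10)]           # hypothetical two-segment wire
print(elmore_delay_ps(1000, wire, 20))  # 47.0
print(elmore_delay_ps(1000, wire, 40))  # 71.0 (doubling the load)
```

Doubling the load capacitance raises the estimate from 47 ps to 71 ps, and a weaker driver (larger `r_driver`) scales every term, which matches the factors listed above.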

Factors Affecting Contamination Delay

Contamination delay in digital circuits is affected by transistor threshold-voltage variations, process variations during fabrication, supply voltage, and temperature, all of which shift the minimum time before the output begins to change. Interconnect parasitic capacitance and resistance also contribute by altering the charge and discharge rates of circuit nodes. Unlike propagation delay, which is set by the slowest path, contamination delay depends on the fastest path through the logic and on the earliest switching behavior of the transistors.
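
Because variation moves both delays, a common practice is to check propagation delay at the slowest operating corner and contamination delay at the fastest one. The sketch below assumes three process corners (SS, TT, FF) with made-up delay values and a hypothetical helper `signoff_delays`; real sign-off uses characterized library data and usually more corners.

```python
# Hypothetical delays (ps) of one path at three process corners; illustrative only.
CORNERS = {
    "SS (slow)": {"t_pd": 140, "t_cd": 30},
    "TT (typical)": {"t_pd": 100, "t_cd": 20},
    "FF (fast)": {"t_pd": 70, "t_cd": 12},
}


def signoff_delays(corners):
    """Return (worst t_pd, its corner, best t_cd, its corner) across corners.

    Setup checks use the largest propagation delay; hold checks use the
    smallest contamination delay, which usually comes from the fast corner.
    """
    pd_corner = max(corners, key=lambda c: corners[c]["t_pd"])
    cd_corner = min(corners, key=lambda c: corners[c]["t_cd"])
    return (corners[pd_corner]["t_pd"], pd_corner,
            corners[cd_corner]["t_cd"], cd_corner)


print(signoff_delays(CORNERS))  # (140, 'SS (slow)', 12, 'FF (fast)')
```

Taking the extreme of each delay at a different corner is deliberately pessimistic: it guarantees both checks hold no matter where a fabricated chip lands within the modeled variation.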

Real-World Examples and Applications

Propagation delay in microprocessors affects the timing of signal transmission between logic gates, which is critical in high-speed devices such as CPUs and GPUs, where clock periods are often well under a nanosecond. Contamination delay, the minimum time for a signal to begin changing, matters in every synchronous design, including safety-critical systems such as automotive airbag controllers, because a hold-time violation can latch incorrect data. Understanding both delays helps you design reliable circuits for real-world applications like telecommunications infrastructure and real-time control systems.

Impact on Circuit Design and Performance

Propagation delay determines the maximum time a signal takes to travel through a circuit, directly limiting the maximum operating frequency and overall speed of your digital design. Contamination delay sets the earliest time unwanted glitches or hazards can appear at the output, which determines the hold-time margin of the sequential elements the path feeds. Understanding both delays is crucial for optimizing circuit timing, avoiding race conditions, and ensuring stable performance in high-speed digital systems.

Best Practices for Managing Delays in Digital Systems

Propagation delay (the maximum time for a signal to pass through a circuit and settle) and contamination delay (the minimum time before an output begins to change after an input change) must both be carefully managed to ensure reliable system performance. To reduce propagation delay, use proper gate sizing, minimize parasitic capacitance, and optimize routing paths. For contamination delay the goal is the opposite: paths that are too fast to meet hold time are typically fixed by inserting delay buffers, as long as the added delay does not break the setup constraint. Your digital design can achieve higher speed and stability by applying static timing analysis tools and incorporating guard bands to accommodate variations in environmental conditions and manufacturing processes.
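
A minimal sketch of a hold fix by buffer insertion, assuming skew is ignored and that every inserted buffer adds at least `t_cd_buf` to the path's contamination delay and at most `t_pd_buf` to its propagation delay. The function name `hold_fix_buffers`, its parameters, and the numbers are hypothetical.

```python
import math


def hold_fix_buffers(t_ccq, t_cd, t_hold, t_cd_buf, t_pd_buf, setup_slack):
    """Number of delay buffers needed on a short path to clear a hold violation.

    Each buffer adds at least t_cd_buf to the contamination delay (helps hold)
    and at most t_pd_buf to the propagation delay (consumes setup slack).
    Raises ValueError if the required buffers would cause a setup violation.
    """
    hold_slack = t_ccq + t_cd - t_hold
    if hold_slack >= 0:
        return 0                                  # hold already met
    n = math.ceil(-hold_slack / t_cd_buf)         # smallest count that closes the gap
    if n * t_pd_buf > setup_slack:
        raise ValueError("hold fix would create a setup violation")
    return n


# Hypothetical values in ps: 30 ps of negative hold slack, 290 ps of setup slack.
print(hold_fix_buffers(t_ccq=30, t_cd=10, t_hold=70,
                       t_cd_buf=12, t_pd_buf=20, setup_slack=290))  # 3
```

Three buffers add at least 36 ps to the contamination delay, clearing the 30 ps deficit, while costing at most 60 ps of the 290 ps setup slack. This illustrates why hold fixing is a trade against setup margin rather than a free change.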

Propagation Delay vs Contamination Delay Infographic

electrown.com
