Pipelining increases instruction throughput by overlapping the execution phases of multiple instructions, while parallelism executes multiple instructions simultaneously across multiple processors or cores to enhance performance. Explore the rest of the article to understand how these techniques can optimize your system's efficiency.

Table of Comparison

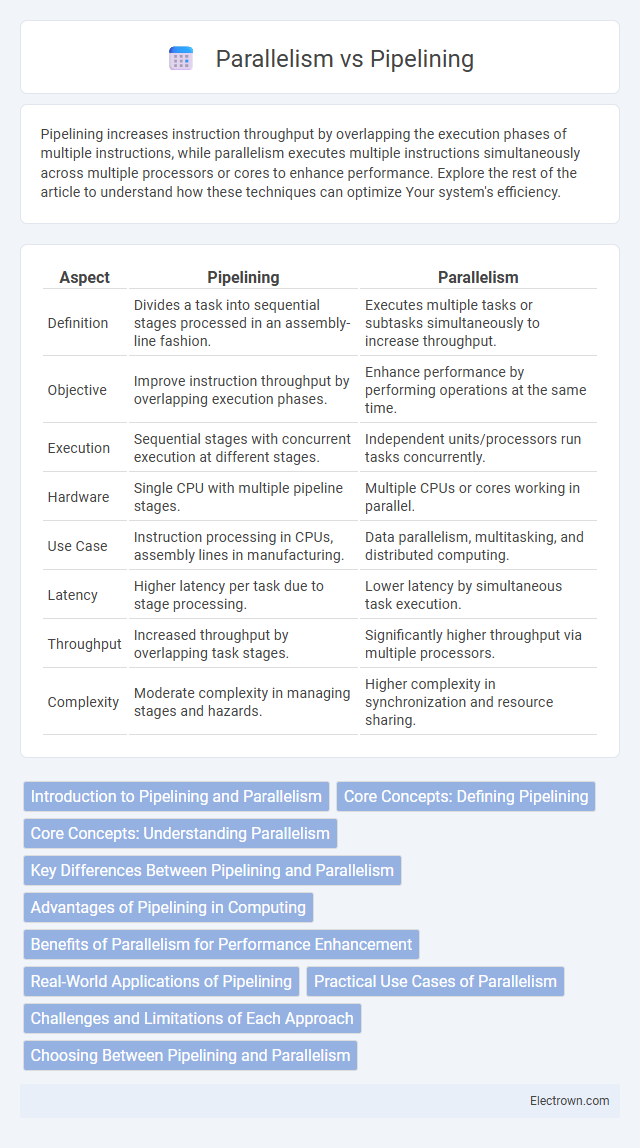

| Aspect | Pipelining | Parallelism |
|---|---|---|
| Definition | Divides a task into sequential stages processed in an assembly-line fashion. | Executes multiple tasks or subtasks simultaneously to increase throughput. |
| Objective | Improve instruction throughput by overlapping execution phases. | Enhance performance by performing operations at the same time. |
| Execution | Sequential stages, each working on a different task at the same time. | Independent units/processors run tasks concurrently. |
| Hardware | Single CPU with multiple pipeline stages. | Multiple CPUs or cores working in parallel. |
| Use Case | Instruction processing in CPUs, assembly lines in manufacturing. | Data parallelism, multitasking, and distributed computing. |
| Latency | Does not shorten any single task; stage overhead can add slightly to it. | Shortens completion time for work that can be split across units. |
| Throughput | Increased throughput by overlapping task stages. | Higher throughput that grows with the number of processors, up to the limits of the workload. |
| Complexity | Moderate complexity in managing stages and hazards. | Higher complexity in synchronization and resource sharing. |

Introduction to Pipelining and Parallelism

Pipelining improves processor throughput by dividing a task into sequential stages, allowing multiple instructions to overlap in execution and increasing instruction-level parallelism. Parallelism enhances computation speed by executing multiple tasks or instructions simultaneously across multiple processors or cores, leveraging hardware resources for concurrent processing. Both techniques optimize performance by exploiting different forms of concurrency within computer architecture.

Core Concepts: Defining Pipelining

Pipelining is a technique in computer architecture where multiple instruction phases are overlapped by dividing the execution process into discrete stages, such as fetch, decode, and execute. Each stage processes a different instruction simultaneously, increasing instruction throughput without reducing the execution time of individual instructions. This contrasts with parallelism, which involves executing multiple instructions concurrently through multiple processing units.
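To make the overlap concrete, the sketch below builds a cycle-by-cycle schedule for the three stages named above. It assumes an idealized pipeline in which every stage takes exactly one cycle and nothing stalls; the function name `pipeline_schedule` and the `STAGES` list are hypothetical and exist only for this illustration.

```python
STAGES = ["fetch", "decode", "execute"]


def pipeline_schedule(num_instructions, stages=STAGES):
    """Return, for each clock cycle, which instruction occupies each stage.

    Idealized model (an assumption): one cycle per stage, no stalls.
    Entry [c][s] is the index of the instruction in stage s during
    cycle c, or None when that stage is empty.
    """
    total_cycles = len(stages) + num_instructions - 1 if num_instructions else 0
    schedule = []
    for cycle in range(total_cycles):
        row = []
        for stage_index in range(len(stages)):
            # Instruction i reaches stage s in cycle i + s.
            instr = cycle - stage_index
            row.append(instr if 0 <= instr < num_instructions else None)
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    for cycle, row in enumerate(pipeline_schedule(4), start=1):
        cells = [f"I{i}" if i is not None else "--" for i in row]
        print(f"cycle {cycle}: " + "  ".join(f"{s}={c}" for s, c in zip(STAGES, cells)))
```

In this model, four instructions finish in six cycles instead of twelve, yet each instruction still spends three cycles in the pipeline.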

Core Concepts: Understanding Parallelism

Parallelism involves executing multiple operations simultaneously by dividing tasks into independent units that run concurrently, enhancing overall system performance. By contrast, pipelining breaks a process into sequential stages that each work on a different item at the same time, improving throughput by overlapping instruction execution. Understanding parallelism helps you design systems that make full use of the available hardware for faster computation.
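As one possible illustration of dividing a task into independent units, the sketch below splits a list into chunks and hands each chunk to a separate worker from Python's standard `concurrent.futures` module. The workload (a sum of squares), the helper names, and the `executor_cls` parameter are all assumptions made for this example.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def sum_of_squares(chunk):
    """Work on one independent chunk; no data is shared with other chunks."""
    return sum(x * x for x in chunk)


def split_into_chunks(data, num_chunks):
    """Split data into at most num_chunks contiguous, nearly equal slices."""
    size = max(1, -(-len(data) // num_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum_of_squares(data, workers=4, executor_cls=ProcessPoolExecutor):
    """Run each chunk on its own worker, then combine the partial results.

    executor_cls is a hypothetical knob for this sketch: ProcessPoolExecutor
    runs chunks in separate processes (suited to CPU-bound work), while
    ThreadPoolExecutor can be passed instead for I/O-bound work.
    """
    chunks = split_into_chunks(data, workers)
    with executor_cls(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)
```

When using the default process pool, call `parallel_sum_of_squares(...)` from code guarded by `if __name__ == "__main__":`, since some platforms re-import the main module in each worker process.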

Key Differences Between Pipelining and Parallelism

Pipelining divides a process into sequential stages, with each stage working on a different task at the same time as the others; this improves throughput but does not reduce the latency of any individual task. Parallelism executes multiple tasks or processes simultaneously across multiple processors or cores, decreasing overall execution time by handling different operations concurrently. Your choice depends on whether you aim to finish a divisible workload sooner (parallelism) or raise the rate at which a single stream of instructions completes (pipelining).
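The latency and throughput distinction can be checked with a little arithmetic. The sketch below is a simplified model, not a description of any real processor: it assumes every stage takes the same time, the pipeline never stalls, and parallel workers pay no coordination cost. All function names are hypothetical.

```python
import math


def pipelined_time(num_tasks, num_stages, stage_time):
    """Total time when tasks flow through an ideal pipeline."""
    if num_tasks == 0:
        return 0
    # Fill the pipeline once, then one task completes per stage_time.
    return (num_stages + num_tasks - 1) * stage_time


def parallel_time(num_tasks, num_stages, stage_time, num_workers):
    """Total time when each worker runs whole tasks start to finish."""
    batches = math.ceil(num_tasks / num_workers)
    return batches * num_stages * stage_time


def task_latency(num_stages, stage_time):
    """Time for one task to pass through every stage (same in both models)."""
    return num_stages * stage_time


if __name__ == "__main__":
    tasks, stages, t, workers = 12, 4, 1, 4
    print("one at a time:", tasks * stages * t)
    print("pipelined:    ", pipelined_time(tasks, stages, t))
    print("parallel:     ", parallel_time(tasks, stages, t, workers))
    print("task latency: ", task_latency(stages, t))
```

With 12 tasks, 4 stages, and 4 workers, this model gives 15 time units for the pipeline and 12 for the parallel workers, against 48 for one-at-a-time execution, while each task still takes 4 units from start to finish in every case.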

Advantages of Pipelining in Computing

Pipelining enhances computing efficiency by allowing multiple instruction phases to overlap, significantly increasing throughput without requiring additional processing units. Although each instruction takes as long as before, the average time between instruction completions drops, and the CPU's functional units spend less time idle, leading to faster program execution. Your system benefits from higher performance out of the same execution hardware thanks to the streamlined instruction handling that pipelining provides.

Benefits of Parallelism for Performance Enhancement

Parallelism significantly boosts performance by dividing tasks into multiple smaller sub-tasks that can be executed simultaneously across multiple processors or cores. This approach reduces overall execution time, enabling faster data processing and improved throughput for complex computations, especially in high-performance computing environments. Parallelism enhances scalability, making it ideal for workloads requiring extensive data handling and real-time responsiveness.
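How much the execution time falls depends on how much of the work can actually be split. A common back-of-the-envelope estimate is Amdahl's law, sketched below under its usual assumptions: a fixed problem size and no synchronization or communication overhead. The function name and parameters are chosen for this example only.

```python
def amdahl_speedup(parallel_fraction, num_processors):
    """Estimate speedup from Amdahl's law: 1 / ((1 - p) + p / n).

    parallel_fraction (p): share of the run time that can be parallelized, 0..1.
    num_processors (n): number of processors or cores, at least 1.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_processors < 1:
        raise ValueError("num_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_processors)


if __name__ == "__main__":
    for cores in (2, 4, 10, 100):
        print(f"{cores:>3} cores, 90% parallel: {amdahl_speedup(0.9, cores):.2f}x")
```

Under this estimate, a workload that is 90% parallelizable speeds up by only a little over 5x on ten processors, because the remaining serial 10% does not shrink.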

Real-World Applications of Pipelining

Pipelining enhances CPU performance by dividing instruction execution into discrete stages, enabling overlapping operations and increasing throughput in processors used in smartphones, gaming consoles, and data centers. Modern graphics processing units (GPUs) employ deep pipelining to handle complex rendering tasks efficiently, improving frame rates and visual quality in real-time applications. Your system's responsiveness also benefits from pipelined architectures, which keep a steady flow of instructions moving through the processor rather than shortening any individual instruction.

Practical Use Cases of Parallelism

Parallelism is widely used in high-performance computing applications such as scientific simulations, real-time data processing, and large-scale machine learning model training where multiple tasks run concurrently on multi-core processors or distributed systems. In cloud computing, parallelism enables efficient handling of vast amounts of data by dividing workloads across numerous servers to reduce latency and increase throughput. Graphics rendering and video processing also leverage parallelism to speed up complex calculations by executing multiple operations simultaneously on GPUs.

Challenges and Limitations of Each Approach

Pipelining faces challenges such as pipeline hazards, including data, control, and structural hazards, which can cause stalls and reduce efficiency. Parallelism struggles with limitations like synchronization overhead, resource contention, and difficulty in dividing tasks evenly across processors. Your choice between pipelining and parallelism depends on the balance between these constraints and the specific application requirements.
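To show how a data hazard turns into stall cycles, the sketch below counts read-after-write stalls for a short instruction sequence. The model is an assumption for illustration and does not describe any specific CPU: five stages (IF, ID, EX, MEM, WB), no forwarding, and a register written in WB can be read by an instruction that is in ID during the same cycle. Control and structural hazards are not modeled, and the `Instr` record is hypothetical.

```python
from typing import NamedTuple, Optional, Tuple


class Instr(NamedTuple):
    """Hypothetical instruction record: one destination register, some sources."""
    dest: Optional[str]
    srcs: Tuple[str, ...]


def count_data_hazard_stalls(program):
    """Count stall cycles caused by read-after-write hazards.

    Assumed model: stages IF, ID, EX, MEM, WB; no forwarding; a value
    written in WB is readable by an instruction in ID that same cycle.
    """
    writeback_cycle = {}  # register -> cycle in which its newest value is written
    prev_decode = 1       # makes the first instruction decode in cycle 2
    stalls = 0
    for instr in program:
        earliest = prev_decode + 1  # in-order: decode right after the previous one
        ready = max((writeback_cycle.get(r, 0) for r in instr.srcs), default=0)
        decode = max(earliest, ready)
        stalls += decode - earliest
        if instr.dest is not None:
            writeback_cycle[instr.dest] = decode + 3  # ID -> EX -> MEM -> WB
        prev_decode = decode
    return stalls


if __name__ == "__main__":
    program = [
        Instr("r1", ("r2", "r3")),  # r1 = r2 op r3
        Instr("r4", ("r1", "r5")),  # reads r1 immediately: hazard
    ]
    print("stall cycles:", count_data_hazard_stalls(program))
```

In this model, a consumer that immediately follows its producer waits two cycles, and placing one unrelated instruction between them cuts the wait to one.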

Choosing Between Pipelining and Parallelism

Choosing between pipelining and parallelism depends on your application's workload characteristics and performance goals. Pipelining improves throughput by breaking tasks into sequential stages, which suits streams of work in which every item passes through the same ordered steps, while parallelism executes multiple tasks simultaneously, enhancing speed for independent operations. The two are not mutually exclusive: multi-core processors typically pipeline each core. Understanding the trade-offs in latency, resource utilization, and complexity helps you optimize your system's efficiency and achieve desired performance outcomes.

Pipelining vs Parallelism Infographic

electrown.com
