A direct mapped cache assigns each block of main memory to exactly one cache line, which keeps lookups fast but raises the risk of collisions and conflict misses. The rest of this article explains how associative caches add placement flexibility and reduce those conflicts, improving your system's performance.

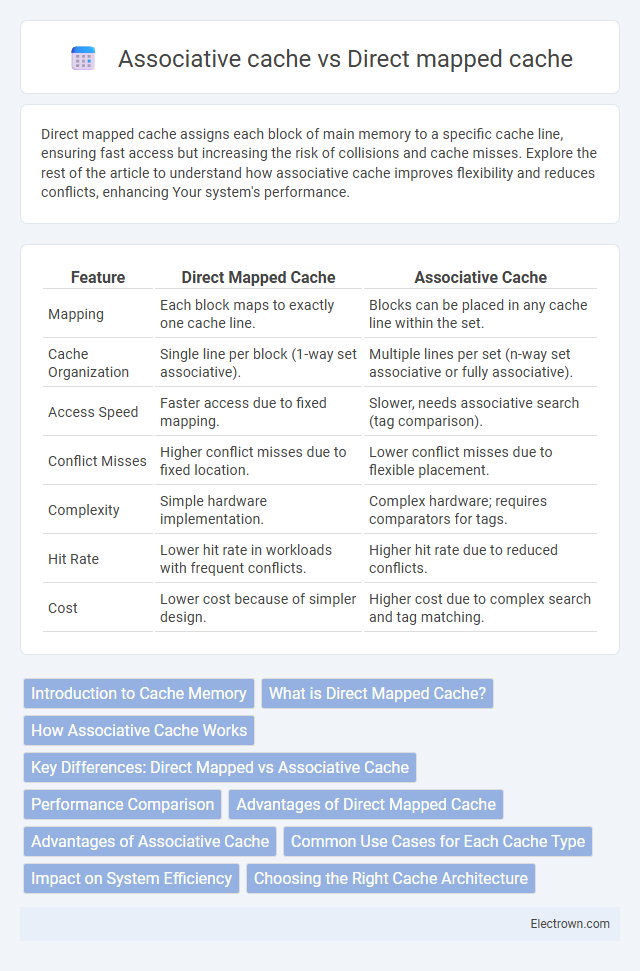

Table of Comparison

| Feature | Direct Mapped Cache | Associative Cache |
|---|---|---|
| Mapping | Each block maps to exactly one cache line. | A block can be placed in any line of its set (any line at all in a fully associative cache). |
| Cache Organization | One line per set (equivalent to 1-way set associative). | Multiple lines per set (n-way set associative or fully associative). |
| Access Speed | Faster access due to fixed mapping. | Typically slower; several tags must be compared before the matching line is selected. |
| Conflict Misses | Higher conflict misses due to fixed location. | Lower conflict misses due to flexible placement. |
| Complexity | Simple hardware implementation; one tag comparator. | More complex hardware; one tag comparator per way plus replacement logic. |
| Hit Rate | Lower hit rate in workloads with frequent conflicts. | Higher hit rate due to reduced conflicts. |
| Cost | Lower cost because of simpler design. | Higher cost due to parallel tag matching and replacement logic. |

Introduction to Cache Memory

Cache memory improves CPU performance by storing frequently accessed data closer to the processor, reducing access time compared to main memory. Direct mapped cache assigns each memory block to a single, fixed cache line, enabling fast access but increasing the chance of conflict misses. Associative cache allows blocks to be stored in any cache line, significantly reducing conflict misses and improving hit rates at the cost of increased complexity and access time.

What is Direct Mapped Cache?

A direct mapped cache assigns each block of main memory to exactly one cache line, chosen by an index derived from the memory address (typically the block number modulo the number of lines). Because only one line has to be checked, lookup is fast and the hardware is simple. The drawback is that every block sharing an index competes for the same line, so two frequently used blocks that collide will keep replacing each other. Knowing how addresses map to lines helps you spot data layouts that cause such collisions.
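The address mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not a model of any particular processor: the function name `split_address` and the cache geometry (64-byte blocks, 256 lines) are assumptions chosen for the example. Real hardware takes these fields directly from bit ranges of the address, which works when both sizes are powers of two.

```python
def split_address(address: int, block_size: int, num_lines: int) -> tuple:
    """Split a byte address into (tag, index, offset) for a direct mapped cache."""
    block_number = address // block_size  # which memory block holds this byte
    offset = address % block_size         # byte position inside the block
    index = block_number % num_lines      # the only cache line this block may occupy
    tag = block_number // num_lines       # tells apart the blocks that share this line
    return tag, index, offset


# Hypothetical geometry: 64-byte blocks, 256 lines (a 16 KiB cache).
first = split_address(0x12345, block_size=64, num_lines=256)
second = split_address(0x12345 + 64 * 256, block_size=64, num_lines=256)
print(first)   # (4, 141, 5)
print(second)  # (5, 141, 5)
```

The two addresses are exactly one cache size apart, so they share index 141 and differ only in the tag. In a direct mapped cache they cannot be resident at the same time.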

How Associative Cache Works

A fully associative cache can store a block in any cache line, while a set-associative cache limits the block to any line within one set selected by the index. On a lookup, the hardware compares the address tag against every candidate line in parallel to find a match. This placement flexibility reduces the conflict misses common in direct mapped caches, improving hit rates and overall system performance. The price is greater hardware complexity and access time, because each additional way needs its own tag comparator and a replacement policy must choose which line to evict.
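As a rough illustration of the lookup just described, here is a toy fully associative cache in Python. The class name `FullyAssociativeCache`, the LRU replacement policy, and the sizes used are assumptions made for this sketch. A dictionary membership test stands in for the parallel tag comparison that hardware would perform.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative cache with LRU replacement (an assumed policy)."""

    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        self.lines = OrderedDict()  # tag -> True, least recently used first

    def access(self, address):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        tag = address // self.block_size    # no index field: the block number is the tag
        if tag in self.lines:               # stands in for comparing all tags at once
            self.lines.move_to_end(tag)     # mark as most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict the least recently used block
        self.lines[tag] = True
        return False


# Hypothetical two-line cache with 64-byte blocks.
cache = FullyAssociativeCache(num_lines=2, block_size=64)
results = [cache.access(a) for a in (0, 4096, 0, 4096, 8192, 0)]
print(results)  # [False, False, True, True, False, False]
```

Addresses 0 and 4096 coexist even though they would collide in many direct mapped layouts. Misses return only once a third block exceeds the two-line capacity.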

Key Differences: Direct Mapped vs Associative Cache

Direct mapped cache assigns each memory block to exactly one cache line, resulting in simpler hardware and faster access but higher conflict misses. Associative cache allows a memory block to be placed in any cache line within a set, reducing conflict misses and improving hit rates at the cost of increased complexity and slower access. The key differences lie in placement flexibility, hardware complexity, and trade-offs between access speed and cache hit efficiency.

Performance Comparison

A direct mapped cache offers faster access times because each memory block maps to a single cache line, so only one tag has to be checked per lookup. An associative cache improves hit rates by giving each block several candidate lines, which reduces conflict misses but increases search complexity and access time. Which one performs better depends on the workload: direct mapped caches do well when the frequently used blocks rarely share an index, as in simple sequential access, while associative caches pay off when several heavily reused blocks would otherwise compete for the same line.
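A small simulation can make the hit-rate side of this trade-off concrete. The sketch below is illustrative only: the function name `count_hits`, the cache geometry, the LRU policy, and the address trace are all assumptions chosen for the example. It counts hits and does not model access time.

```python
def count_hits(addresses, num_lines, ways, block_size=64):
    """Count hits for a cache with num_lines total lines and `ways` lines per set.

    ways=1 models a direct mapped cache; ways=num_lines models a fully
    associative one. Replacement within a set is LRU (an assumption).
    """
    num_sets = num_lines // ways
    sets = [[] for _ in range(num_sets)]  # each list holds tags, least recently used first
    hits = 0
    for address in addresses:
        block = address // block_size
        index, tag = block % num_sets, block // num_sets
        tags = sets[index]
        if tag in tags:
            hits += 1
            tags.remove(tag)   # re-appended below as most recently used
        elif len(tags) == ways:
            tags.pop(0)        # set is full: evict the least recently used tag
        tags.append(tag)
    return hits


# Two blocks exactly one cache size apart, accessed alternately (8 accesses).
trace = [0, 8 * 64] * 4
print(count_hits(trace, num_lines=8, ways=1))  # 0 hits: the blocks evict each other
print(count_hits(trace, num_lines=8, ways=2))  # 6 hits: both blocks fit in one set
```

With the same total capacity, the direct mapped configuration misses on every access in this deliberately adversarial trace, while the 2-way configuration misses only on the first touch of each block. Traces without such collisions would show little or no difference.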

Advantages of Direct Mapped Cache

A direct mapped cache offers faster access times because every memory block maps to one fixed cache line, so a lookup needs a single tag comparison. It requires simpler hardware, resulting in lower cost and power consumption compared to associative caches. Placement is also fully predictable: since an incoming block has only one possible destination, no replacement policy is needed, which simplifies cache management.

Advantages of Associative Cache

An associative cache lowers miss rates because a block can be stored in any of several lines, whereas a direct mapped cache limits each block to a single line. This flexibility improves overall system performance by minimizing conflict misses and increasing hit rates. It is especially useful when many data structures or tasks are active at once, since their blocks are less likely to evict one another, which makes associative caches well suited to complex computing tasks.

Common Use Cases for Each Cache Type

Direct mapped cache is commonly used in embedded systems and applications requiring simple, fast cache lookups with minimal hardware complexity, such as microcontrollers and low-power devices. Associative cache, including fully associative and set-associative caches, is favored in general-purpose processors, high-performance computing, and systems where reducing cache misses and improving hit rates is critical, like modern CPUs and servers. The choice between direct mapped and associative cache influences system performance based on workload characteristics and hardware constraints.

Impact on System Efficiency

Direct mapped cache offers faster access times due to its simpler indexing mechanism but suffers from higher conflict misses, reducing system efficiency in workloads with frequent data collisions. Associative cache reduces conflict misses by allowing multiple blocks per set, improving hit rates and system efficiency, especially in programs with irregular memory access patterns. The trade-off between complexity and hit rate directly impacts overall system performance, with associative caches providing better efficiency at the cost of increased hardware complexity and access latency.
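One common way to weigh access latency against hit rate is the average memory access time: hit time plus miss rate times miss penalty. The sketch below applies that formula in Python. The function name `average_access_time` and every number in it are hypothetical, picked only to show how a slightly slower hit can still win overall. They are not measurements of any real cache.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical figures, in clock cycles.
direct_mapped = average_access_time(hit_time=1.0, miss_rate=0.10, miss_penalty=100.0)
set_associative = average_access_time(hit_time=1.2, miss_rate=0.05, miss_penalty=100.0)

print(f"direct mapped:   {direct_mapped:.1f} cycles")
print(f"set associative: {set_associative:.1f} cycles")
```

Under these assumed numbers the associative design comes out ahead despite its longer hit time. If the two miss rates were nearly equal, the shorter hit time of the direct mapped design would decide the comparison instead.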

Choosing the Right Cache Architecture

Choosing the right cache architecture depends on balancing speed, complexity, and hit rate. A direct mapped cache offers simplicity and faster access times by mapping each memory block to a single cache line, but it can suffer from higher conflict misses. An associative cache improves hit rates by allowing a memory block to be stored in any of several locations, which helps across diverse workloads and makes it the better choice when your system needs placement flexibility and fewer cache misses.

Direct mapped cache vs associative cache Infographic

electrown.com
